Design and Development of a Wearable Robotic Vision System with Stabilized Imaging for Terrain Classification in Lower Limb Prostheses

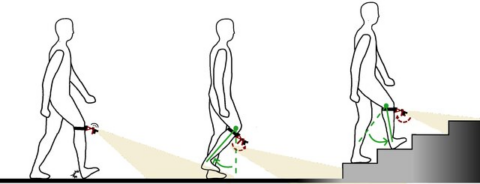

Powered lower limb prostheses have gained attention for their potential to restore mobility and improve the quality of life of their users. However, controlling these robotic devices across different applications is challenging, as it generally requires coordinating effective motion control with the detection of the user's intent. This challenge is particularly pronounced in dynamic environments, which demand rapid adaptation to varying terrain surfaces and inclinations as well as to different tasks, such as turning or ascending and descending stairs. On-board cameras can acquire information about the environment, which the controller can then use to plan control tasks accordingly. However, as in many camera-based robotic applications, real-time image stabilization is critical: the motion of the prosthesis and the vibrations caused by walking can produce blurry, poorly focused images that are unreliable for terrain classification. This thesis aims to explore, design, and develop a camera stabilization system for terrain classification in lower limb prostheses.


Rodrigo J. Velasco-Guillen, M.Sc.

Department of Electrical Engineering

Chair of Autonomous Systems and Mechatronics

- Phone number: +49 9131 85-23145

- Email: rodrigo.velasco@fau.de